Docker – Deploying WebApps on Docker

Docker applies virtualization at the operating-system level to create a portable environment that packages everything an application needs: libraries, system tools such as grep, the application itself (for example a web server or service), and the dependencies it relies on. A container does not include its own Linux kernel; it shares the kernel of the host and runs as one or more isolated processes. The benefits come from using containers rather than virtual machines: you can run the exact same application environment on different servers without modifying it, and without provisioning a separate machine for every deployment.

Running Your Web Apps in Docker Containers

If you want your application to behave consistently across development, testing, and production, Docker is worth considering. Open-source tools like it have spread quickly because they let teams iterate on their products without large tooling investments. The environments Docker creates are not the same as virtual machines, and the differences bring trade-offs, but for most web applications the benefits outweigh the costs. The main points to understand are:

- Containerization vs Virtualization: Docker isolates applications in containers rather than in virtual machines. A container is built on Linux kernel features: namespaces give each container its own view of resources such as the file system, process IDs, and the network, and control groups (cgroups) limit how much CPU and memory it can use. For example, an application’s files live in the container’s own file system namespace. Because the image carries the application’s dependencies, it can be deployed on any machine that runs Docker. This isolation also gives deployment automation tooling a safe, consistent environment to run in, which the Docker daemon sets up automatically.

- Single Process Containers versus Multiple Process Containers: Docker does not restrict a container to a single process. Each container starts one main process, and that process can spawn children, so Docker manages a whole process tree per container. An application made of several services is usually split into one container per service, and the containers are then connected over a Docker network.

- Built for developers: Docker is focused on the developer workflow, so it is important to think about where your application will ultimately run. Managed platforms such as Google App Engine or Heroku have their own deployment models, and a container image may need extra configuration before it fits them. Containers also share the host kernel, so they isolate workloads less strongly than virtual machines do. If you need hard isolation between untrusted workloads, Docker on its own may not be enough.

- Scaling across many hosts: The Docker engine on its own manages containers on a single host. It does not scale or auto-scale containers across multiple hosts, and it does not provide load balancing or failover between hosts. These features matter in production because they let you scale out and recover from failures without manually recreating containers. They are provided by orchestration tools such as Docker Swarm mode or Kubernetes, which run on top of the container engine.

Prerequisite

Docker is an open-source containerization platform for apps and services. It allows developers to build, ship, and run any application in a consistent, reproducible way across whatever machines they choose. It was developed by Solomon Hykes in 2013 while working at dotCloud as a way to speed up their development process.

- Docker Images: Instead of installing an application directly on a server, developers package it as a “Docker image”: a read-only template that holds the application code together with its runtime, libraries, and configuration. Containers are created from an image and can be started, stopped, and removed independently. These containers are what is actually executed on the server.

- Containers: Containers are lightweight, resource-isolated instances that run side by side on the same host. They start and stop quickly because they do not boot a separate operating system; each container is a set of isolated processes running on the host’s kernel. Containers do not share memory address spaces, and by default each one has its own network stack and file system, so they communicate with each other over Docker networks or explicitly shared volumes. Kernel namespaces are what let containers use host resources without compromising that isolation.

- Docker Compose: Docker Compose is a tool that helps you define, build, and run multi-container Docker applications from a single YAML file. Compose talks to the Docker Engine API on your behalf, so one command can create, start, or stop every service the file describes.
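As a rough illustration of what such a YAML file can look like, the sketch below writes a minimal docker-compose.yml for a single web service. The service name web, the image name mywebapp, and the port mapping are assumptions borrowed from the tutorial later in this article; Compose itself does not require any of them.

```shell
#!/bin/sh
# Write a minimal Compose file into the current directory.
# "web" is a hypothetical service name; "mywebapp" is an assumed image
# that has already been built with docker build.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: mywebapp
    ports:
      - "8080:8080"        # host port : container port
    restart: unless-stopped
EOF

echo "wrote docker-compose.yml"
```

With Docker and the Compose plugin installed, running docker compose up -d in the same directory should start the service in the background, and docker compose down should stop and remove it.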

When should Docker be used?

Docker is not a replacement for virtual machines, but rather a lightweight way to package and run applications, including larger web applications. Its greatest advantage is that the same image can be started many times, which makes it easy to scale out by running more instances at any given time. It also provides isolation between containers so that they don’t step on each other.

Why Web Apps?

Web applications are among the most prevalent types of applications built in the 21st century, and they are a great way for organizations to expand their reach and increase their revenue. Services like Google Docs or Salesforce.com show how convenient and seamless web apps can be. Packaging a web app with Docker helps you ship it the same way to every environment.

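When Not To Use Docker?

Docker is a good fit if you need to run multiple isolated instances of your application on a single host. It is a weaker fit when you need stronger isolation than a shared kernel can provide, or when the application needs a different operating system kernel than the host’s. Depending on what you need, you may also want to look into other tools such as systemd’s container support (systemd-nspawn), rkt (pronounced “rocket”, a CLI tool for running app containers on Linux that was written from scratch for security and ease of use, although the project is no longer maintained), or a full hypervisor such as VirtualBox.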

Step-By-Step Processes to Deploy WebApps On Docker: Complete Tutorial

First, install Docker on each server where you plan to build or run the image; see Docker’s installation documentation for your platform.

Step 1: Creating Dockerfile

A Dockerfile is a plain-text file that you create in any text editor. It lists, in order, the instructions that docker build runs to assemble an image: which base image to start from, which files to copy in, and which command to run at startup. The result is an entirely self-contained container image. Be careful about what you copy into it: anything baked into an image, such as source code or private keys, can be read by anyone who is able to pull the image or who gains access to your Docker daemon.

FROM tomcat:8.0.43-jre8

COPY target/helloworld.war /usr/local/tomcat/webapps/

EXPOSE 8080

RUN chmod +x /usr/local/tomcat/bin/catalina.sh

CMD ["catalina.sh", "run"]

- FROM tomcat:8.0.43-jre8: Specifies the base image to use, which is Tomcat version 8.0.43 with Java Runtime Environment (JRE) version 8.

- COPY target/helloworld.war /usr/local/tomcat/webapps/: The COPY command copies the helloworld.war file from the local target folder into the webapps directory of Tomcat within the image, allowing Tomcat to deploy the application automatically.

- EXPOSE 8080: Informs Docker that the container listens on port 8080 at runtime. This does not actually publish the port; it serves as documentation for users about which ports are intended to be published.

- RUN chmod +x /usr/local/tomcat/bin/catalina.sh: This command ensures the catalina.sh script has execute permissions, allowing Tomcat to start properly inside the container.

- CMD ["catalina.sh", "run"]: Specifies the command to run when the container starts. In this case, it runs the catalina.sh script with the argument run, which starts Tomcat in the foreground and makes it serve the deployed web application.

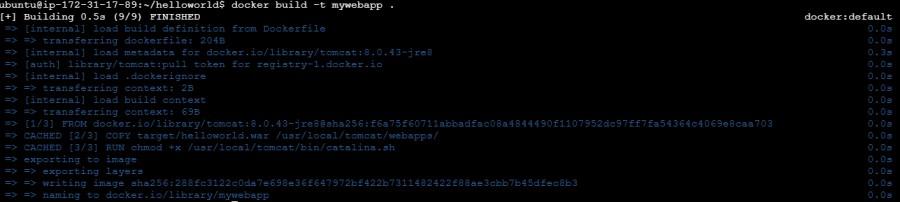
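The COPY instruction above fails at build time if target/helloworld.war is not present in the build context, so it can help to check for the file before building. The following is a small sketch of such a check. The function name check_war is hypothetical, and the hint about mvn package assumes a Maven project, which the target folder suggests but the article does not state.

```shell
#!/bin/sh
# check_war: succeed only if the WAR file that the Dockerfile copies exists.
# Usage: check_war [path-to-war]   (defaults to target/helloworld.war)
check_war() {
  war_path=${1:-target/helloworld.war}
  if [ -f "$war_path" ]; then
    echo "found $war_path"
  else
    # Assumption: the project is built with Maven; adjust for other tools.
    echo "missing $war_path; build the project first (for example with mvn package)" >&2
    return 1
  fi
}
```

Calling check_war before docker build, for example as check_war && docker build -t mywebapp ., stops early with a readable message instead of a failed build step.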

Step 2: Containerize your application

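Build a Docker image of your web app. The general form of the command is shown first, followed by a concrete example that names the image mywebapp. The trailing dot sets the build context to the current directory, which must contain the Dockerfile.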

docker build -t [image name] .

docker build -t mywebapp .

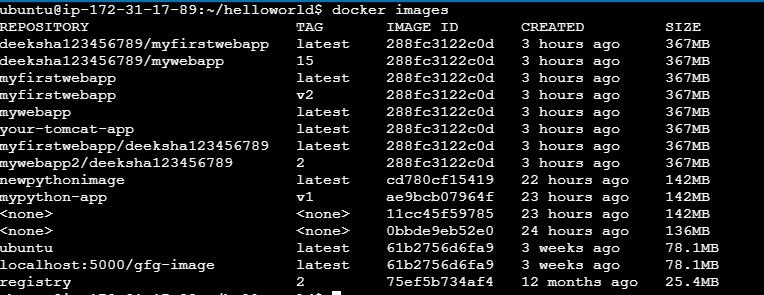

List the Docker Images

docker images

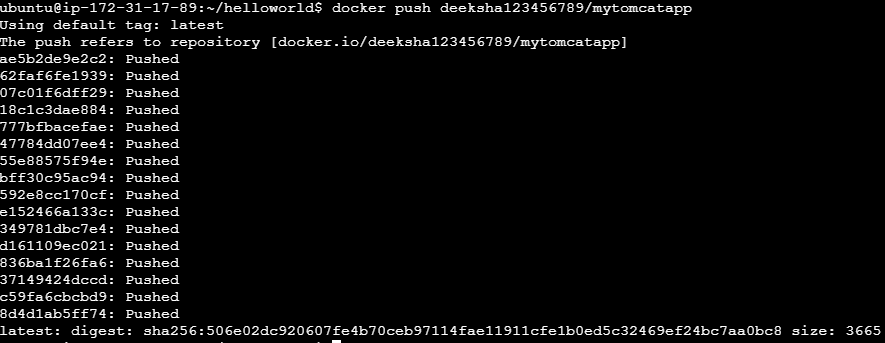
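When no tag is given, Docker names the image with the tag latest. The sketch below makes that default explicit and wraps the build and list steps in one function. The helper names with_default_tag and build_image are hypothetical, and the tag handling is deliberately simplified.

```shell
#!/bin/sh
# with_default_tag: append ":latest" when the reference has no tag.
# Simplified: it does not handle registry hosts that include a port,
# such as localhost:5000/mywebapp.
with_default_tag() {
  case $1 in
    *:*) printf '%s\n' "$1" ;;
    *)   printf '%s:latest\n' "$1" ;;
  esac
}

# build_image: build the image from a context directory, then list it.
# Usage: build_image <name[:tag]> [context-dir]
build_image() {
  ref=$(with_default_tag "$1")
  context=${2:-.}
  docker build -t "$ref" "$context" && docker images "$ref"
}
```

For example, build_image mywebapp:1.0 would build from the current directory and then show only the matching row of docker images.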

Step 3: Push the docker image to a docker repository

Log in to Docker Hub

docker login

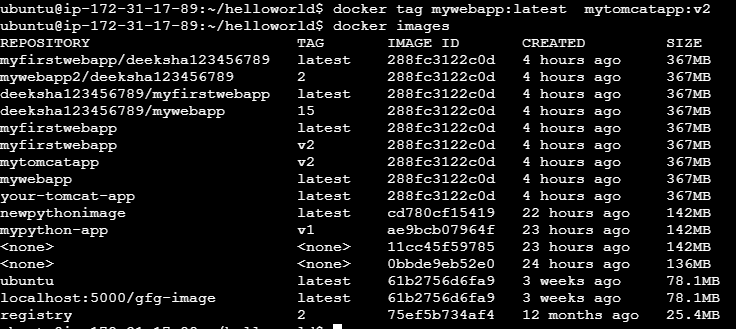

Retag the Docker image so that its new name starts with your Docker Hub username

docker tag [existing_image_name:tag] [new_image_name:tag]

Push the Docker image

docker push username/image_name

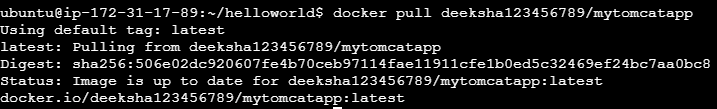
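The tag and push steps can be combined into a small script. This is only a sketch: hub_ref and publish_image are hypothetical helper names, alice in the usage note is a placeholder username, and the script assumes docker login has already succeeded.

```shell
#!/bin/sh
# hub_ref: compose a Docker Hub reference of the form username/name:tag.
# The tag defaults to "latest" when omitted.
hub_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "${3:-latest}"
}

# publish_image: retag a local image and push it to the registry.
# Usage: publish_image <local-name:tag> <username/name:tag>
publish_image() {
  local_ref=$1
  remote_ref=$2
  docker tag "$local_ref" "$remote_ref" && docker push "$remote_ref"
}
```

A call such as publish_image mywebapp:latest "$(hub_ref alice mywebapp 1.0)" would tag the local image as alice/mywebapp:1.0 and push it.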

Step 4: Pull the docker image and run in Linux

To pull and run a Docker image in Linux, use the docker run command with the image name. This command automatically pulls the image if it’s not available locally and starts a container based on that image.

Pull the image from Docker Hub using the command below.

docker pull username/image_name

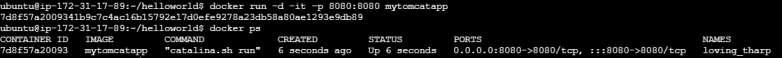

Run the Docker image using the command below, as shown in the image that follows.

docker run -d -it -p 8080:8080 image_name:tag

This docker run command creates and runs a new Docker container based on the specified image. Let’s break down the options:

- -i: Keeps STDIN open even if not attached.

- -t: Allocates a pseudo-TTY, which allows you to interact with the container’s shell.

- -d: Detaches the container and runs it in the background.

- -p 8080:8080: Maps port 8080 of the host machine to port 8080 of the container.

- image_name:tag: Specifies the name and tag of the Docker image used to create the container.

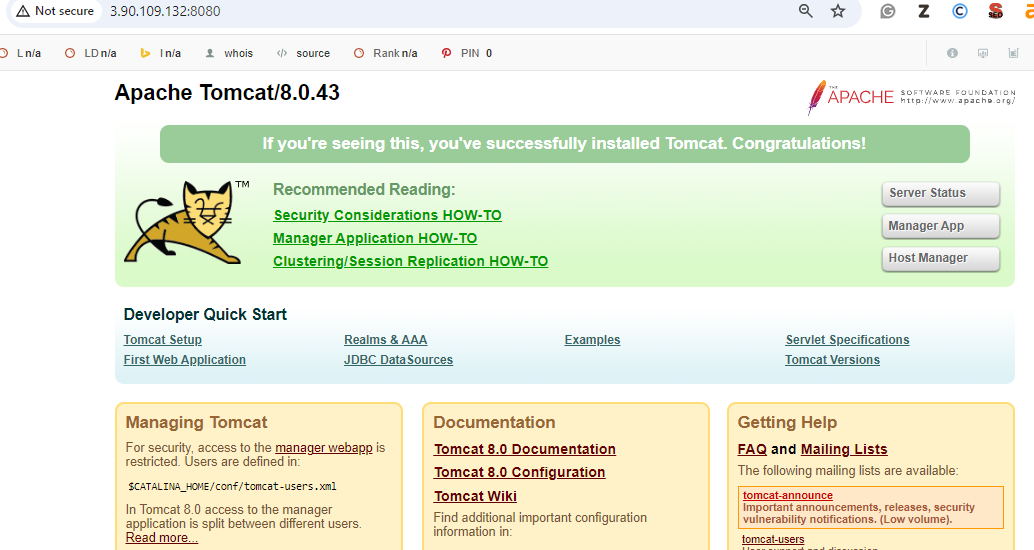
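A server such as Tomcat does not read from a terminal, so for a background container the -i and -t options can usually be left out. The sketch below runs the image detached with a fixed container name and then checks on it. The names publish_arg and run_webapp and the default container name mywebapp are assumptions made for illustration.

```shell
#!/bin/sh
# publish_arg: build the value for -p from a host port and a container port.
# The container port defaults to 8080, the port Tomcat listens on here.
publish_arg() {
  printf '%s:%s\n' "$1" "${2:-8080}"
}

# run_webapp: start the container in the background, then show its
# status and the log output produced so far.
# Usage: run_webapp <image[:tag]> [host-port] [container-name]
run_webapp() {
  image=$1
  host_port=${2:-8080}
  name=${3:-mywebapp}
  docker run -d --name "$name" -p "$(publish_arg "$host_port")" "$image" &&
    docker ps --filter "name=$name" &&
    docker logs "$name"
}
```

Naming the container makes later commands such as docker stop mywebapp and docker rm mywebapp easier to type than working with a generated container ID.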

Step 5: Access the docker container

To reach the application, make sure the container is running and that its port is published to the host, as with the -p 8080:8080 option used in Step 4. On the machine that runs Docker, open http://localhost:8080 in a browser or with curl. From another machine, use the EC2 instance’s public IP address or DNS name together with the mapped port, and check that the instance’s security group allows inbound traffic on that port.
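With Tomcat’s default settings, a file named helloworld.war is deployed under the context path /helloworld, so that is the path to request. The following sketch builds the URL and probes it with curl. The function names app_url and check_app are hypothetical, and the sketch assumes curl is installed and that Tomcat’s default deployment settings have not been changed.

```shell
#!/bin/sh
# app_url: build the URL of the deployed application.
# Usage: app_url <host> [port] [context-path]
app_url() {
  printf 'http://%s:%s/%s/\n' "$1" "${2:-8080}" "${3:-helloworld}"
}

# check_app: request the URL and report whether the server answered
# with a successful HTTP status (curl -f fails on HTTP errors).
check_app() {
  url=$(app_url "$@")
  if curl -fsS -o /dev/null "$url"; then
    echo "OK $url"
  else
    echo "FAILED $url" >&2
    return 1
  fi
}
```

On the Docker host, check_app localhost probes the local mapping; from elsewhere, pass the instance’s public IP address or DNS name as the first argument.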