Kubernetes – Taint and Toleration

A pod is a group of one or more containers and is the smallest deployable unit in Kubernetes. A node represents a single machine in a cluster (we can simply view these machines as a set of CPU and RAM). A node can be a virtual machine or a physical machine, either in a data center or hosted on a cloud provider like Azure.

When a user runs the pod creation command given below, the request is sent to the API server. The scheduler is always watching the API server for new events. It identifies unassigned pods and decides which node each pod should be deployed on, based on various factors such as node selectors, taints/tolerations, node affinity, and CPU and memory requirements. Once a node is chosen and the decision is sent to the API server, the kubelet on that node makes sure that the pod is running on it.

kubectl (client): kubectl create -f <pod-yaml-file-path>

Need for Taints and Tolerations

Nodes with different hardware: If you have a node with special hardware (for example, a GPU), you may want to schedule only the pods that need that hardware on it. Example: Consider two applications. APP 1 is a simple dashboard application and APP 2 is a data-intensive application, and they have different CPU and memory requirements. APP 1 does not require much memory or CPU, whereas APP 2 needs high memory and CPU (a GPU machine). With the help of taints and tolerations plus node affinity, we can make sure that APP 2 is deployed on a node that has high CPU and memory, while APP 1 can be scheduled on any node with low CPU and memory.

Limit the number of pods on a node: If you want a node to run only a certain number of pods to reduce the load on it, taints/tolerations plus node affinity can help achieve that. Example: Consider a pod running a database application that needs to answer queries quickly and be highly available. We dedicate a node with high memory and CPU to this pod. The node then runs only that one pod, so the pod does not have to compete with other workloads for the node's resources.

- Node affinity makes sure that pods are scheduled on particular nodes.

- Taints are the opposite of node affinity; they allow a node to repel a set of pods.

- Tolerations are applied to pods and allow (but do not require) the pods to schedule onto nodes with matching taints.

Let’s understand this with an example: Consider a Person N1 and a Mosquito P1. Taint example: Person N1 applies a repellent (taint), so Mosquito P1 can no longer attack Person N1. Now suppose there is a Wasp P2 that tries to attack Person N1.

Toleration example: Wasp P2 is tolerant to the repellent, so the repellent has no effect on it and Person N1 can be attacked. Two things decide whether a mosquito or wasp can land on a person: the taint (repellent) on the person and the insect's tolerance to that taint.

In the Kubernetes world, persons correspond to nodes, and the mosquito and wasp correspond to pods.

Example

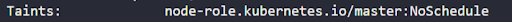

Case 1: Taint Node 1 (Blue)

Since none of the pods tolerate the taint, none of them will be scheduled on Node 1.

Case 2

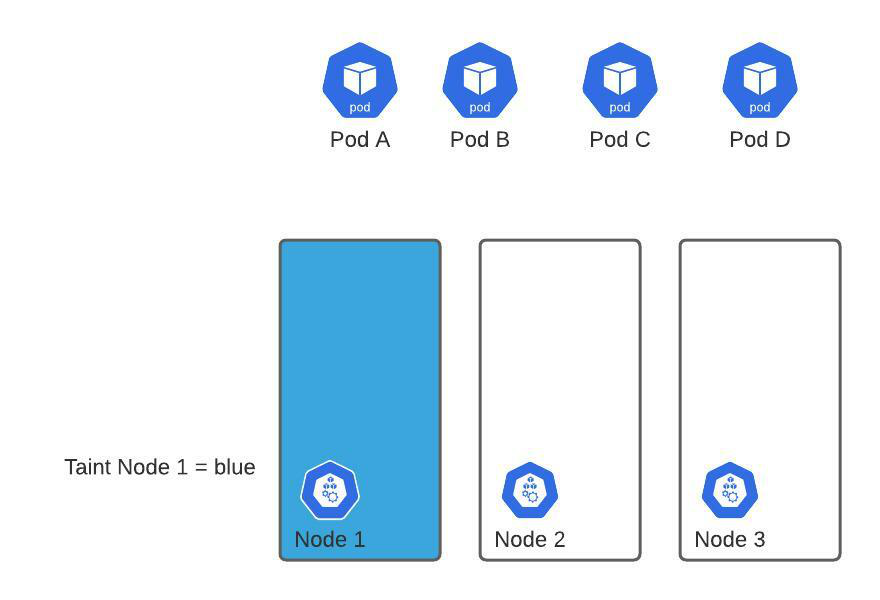

We add a toleration to Pod D. Now only Pod D can be scheduled on Node 1.

Taints and Tolerations

Taints are a property of nodes that push pods away if they do not tolerate the node's taint. Like labels, multiple taints can be applied to a node.

Note

How can we enable certain pods to be scheduled on tainted nodes?

By adding tolerations to those pods, which specify the taints they tolerate.

Tolerations are set on pods and allow the pods to schedule onto nodes with matching taints. Taints and tolerations are a scheduling mechanism; they have nothing to do with security.

Syntax

kubectl taint nodes node-name key=value:taint-effect

Taint-effect:

- NoSchedule: New pods are not scheduled on the node unless they tolerate the taint. A pod must tolerate every NoSchedule taint on the node to be scheduled there. Pods that are already running on the node are not evicted.

- PreferNoSchedule: The scheduler tries not to place a pod that does not tolerate the taint on the node, but this is a preference, not a guarantee.

- NoExecute: As soon as a NoExecute taint is applied to a node, existing pods that do not have a matching toleration are evicted from it, and new pods without the toleration are not scheduled on it.
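The difference between the three effects can be compared with a small simulation. This is a simplified Python sketch, not scheduler code: the names `Taint`, `can_schedule`, `is_preferred`, and `is_evicted` are hypothetical, and a toleration is reduced to a tolerated (key, value) pair, ignoring operators and per-effect tolerations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


def untolerated(taints, tolerated):
    """Return the taints whose (key, value) pair the pod does not tolerate."""
    return [t for t in taints if (t.key, t.value) not in tolerated]


def can_schedule(taints, tolerated):
    """A new pod is rejected by any untolerated NoSchedule or NoExecute taint."""
    return all(t.effect == "PreferNoSchedule" for t in untolerated(taints, tolerated))


def is_preferred(taints, tolerated):
    """PreferNoSchedule only makes a node less attractive; it never blocks a pod."""
    return not any(t.effect == "PreferNoSchedule" for t in untolerated(taints, tolerated))


def is_evicted(taints, tolerated):
    """Only an untolerated NoExecute taint removes a pod that is already running."""
    return any(t.effect == "NoExecute" for t in untolerated(taints, tolerated))


no_tolerations = set()  # a pod without any toleration
for effect in ("NoSchedule", "PreferNoSchedule", "NoExecute"):
    taints = [Taint("app", "blue", effect)]
    print(effect, can_schedule(taints, no_tolerations), is_evicted(taints, no_tolerations))
# NoSchedule False False
# PreferNoSchedule True False
# NoExecute False True
```

A pod that tolerates `app=blue`, modelled here as `{("app", "blue")}`, passes all three checks regardless of the effect.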

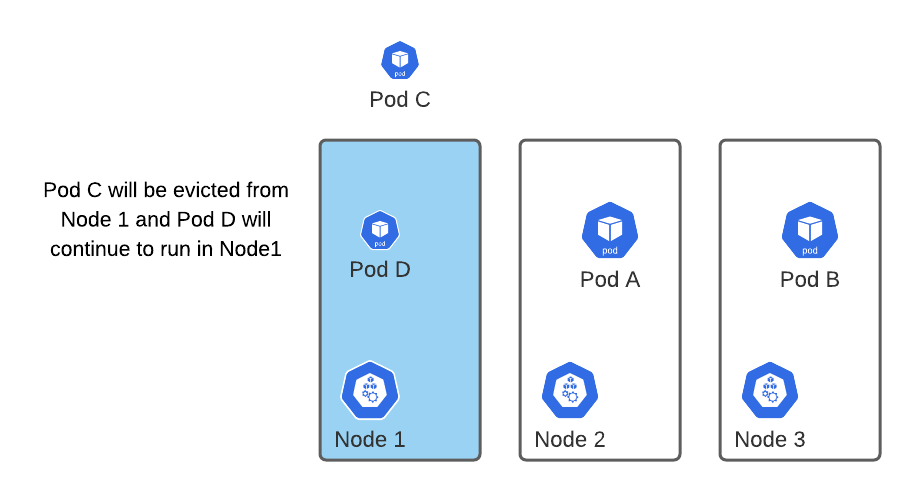

Example of NoExecute Taint effect

Currently, none of the pods tolerates the blue taint.

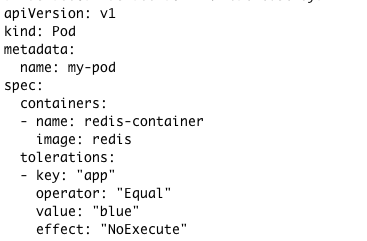

Pod D YAML file

- Line 1-2: v1 of the Kubernetes Pod API. From kind, Kubernetes knows which type of object to create.

- Line 3-4: metadata provides information that does not influence how the pod behaves. It is used to define the name of the pod and a few labels (which will be used later by controllers).

- Line 5: spec is where we define the containers. A pod can have multiple containers.

- Line 6-8: The container name here is redis-container, and the redis image will be pulled from the container registry.

- Line 7: The default value of operator is Equal. A toleration “matches” a taint if the keys are the same and the effects are the same, and either the operator is Exists (in which case no value should be specified), or the operator is Equal and the values are equal. If the key is empty and the operator is Exists, the toleration matches all keys and values (i.e. it will tolerate everything). effect is the type of taint effect; here we chose the NoExecute effect.
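The matching rule described above can be written out as a short Python sketch. It is an illustration, not the Kubernetes implementation. The manifest is a hypothetical reconstruction of Pod D: the container name, image, and NoExecute effect come from the text, while the pod name `pod-d` and the `app`/`blue` toleration key and value are assumptions that mirror the taint used in this article. The function name `tolerates` is also made up for the example.

```python
import json

# Hypothetical reconstruction of the Pod D manifest (see the assumptions above).
pod_d = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "pod-d"},  # assumed name
    "spec": {
        "containers": [{"name": "redis-container", "image": "redis"}],
        "tolerations": [
            {"key": "app", "operator": "Equal", "value": "blue", "effect": "NoExecute"}
        ],
    },
}


def tolerates(toleration, taint):
    """Return True if a single toleration matches a single taint."""
    operator = toleration.get("operator", "Equal")  # Equal is the default
    key = toleration.get("key", "")
    effect = toleration.get("effect", "")
    # An empty effect on the toleration matches every taint effect.
    if effect and effect != taint["effect"]:
        return False
    # An empty key is only meaningful with Exists and then matches every key.
    if not key:
        return operator == "Exists"
    if key != taint["key"]:
        return False
    if operator == "Exists":
        return True
    return toleration.get("value", "") == taint.get("value", "")


taint = {"key": "app", "value": "blue", "effect": "NoExecute"}
print(any(tolerates(t, taint) for t in pod_d["spec"]["tolerations"]))  # True
print(tolerates({"operator": "Exists"}, taint))  # True: tolerates everything
print(tolerates({"key": "app", "value": "green", "effect": "NoExecute"}, taint))  # False
print(json.dumps(pod_d, indent=2))
```

The JSON printed on the last line can be saved to a file and passed to `kubectl create -f`, which accepts JSON as well as YAML manifests.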

Now taint Node 1 with blue

kubectl taint nodes node1 app=blue:NoExecute

Here, node1 is the name of the node on which the taint will be applied, and app=blue:NoExecute is the key-value pair followed by the type of taint effect. This means that all existing pods on the node that do not tolerate the taint will be evicted.

Pod C will be evicted from Node 1, as it is not tolerant to taint blue.


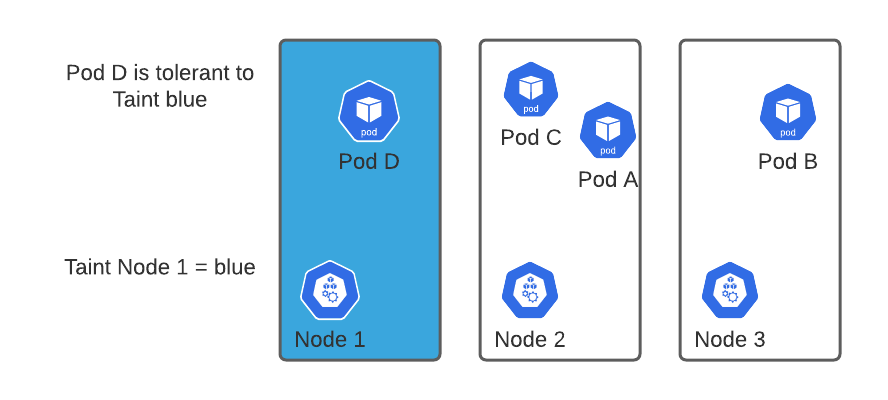

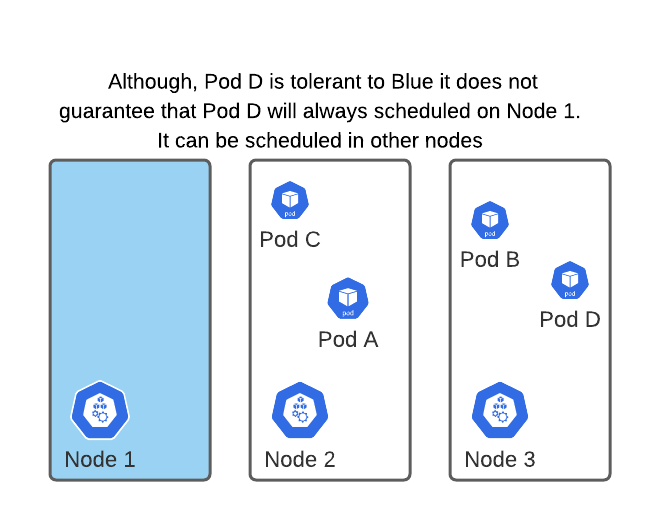

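In the above example, Pod D was scheduled on Node 1. Could it have been scheduled on another node instead?

Yes. A toleration allows a pod to run on a tainted node but does not require it, so the scheduler may still place Pod D on any other node that accepts it.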


Taints/tolerations + node affinity = a specific pod can be scheduled only on a specific node, and no other pods can be scheduled on the tainted node.
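Why both mechanisms are needed can be seen in a small Python sketch. It is a toy model under stated assumptions: taints, tolerations, and labels are plain strings, node affinity is reduced to a single required label, and the node and pod names, the `app=blue` label on node1, and the function `feasible_nodes` are all hypothetical.

```python
def feasible_nodes(pod, nodes):
    """Return the nodes a pod may land on in this toy model.

    A node is feasible when the pod tolerates every taint on it and,
    if the pod requires a label (simplified node affinity), the node has it.
    """
    result = []
    for name, node in nodes.items():
        tolerated = all(taint in pod["tolerations"] for taint in node["taints"])
        wanted = pod.get("required_label")
        affinity_ok = wanted is None or wanted in node["labels"]
        if tolerated and affinity_ok:
            result.append(name)
    return result


nodes = {
    "node1": {"taints": ["app=blue"], "labels": ["app=blue"]},  # dedicated node
    "node2": {"taints": [], "labels": []},
}

pod_c = {"tolerations": []}
pod_d_toleration_only = {"tolerations": ["app=blue"]}
pod_d_with_affinity = {"tolerations": ["app=blue"], "required_label": "app=blue"}

print(feasible_nodes(pod_c, nodes))                  # ['node2']
print(feasible_nodes(pod_d_toleration_only, nodes))  # ['node1', 'node2']
print(feasible_nodes(pod_d_with_affinity, nodes))    # ['node1']
```

The taint keeps Pod C off node1, but the toleration alone still leaves node2 open to Pod D. Only the added affinity requirement pins Pod D to node1.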

Note

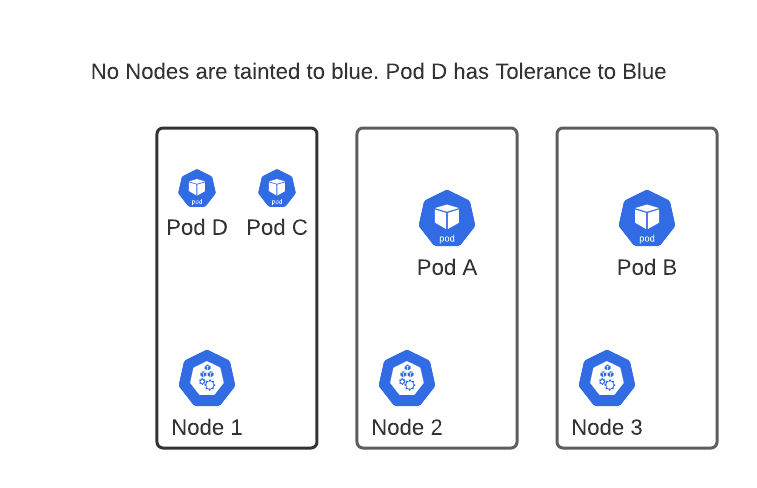

The master node does not run any of our pods. When the cluster is created, Kubernetes taints the master node so that pods without a matching toleration are not scheduled on it.

kubectl describe node kubemaster | grep Taints

Output