Introduction to Kubernetes (K8S)

Kubernetes is an open-source platform that manages containers, such as those built with Docker, across a cluster of machines. Along with automating the deployment and scaling of containers, it provides self-healing: it automatically restarts failed containers and reschedules them when their host machines die. This capability improves the application’s availability.

What is Kubernetes (k8s)?

Kubernetes is an open-source container management tool that automates container deployment, scaling up and down, and load balancing; for this reason it is also called a container orchestration tool. It is written in Go (Golang) and has a vast community: it was first developed by Google and later donated to the CNCF (Cloud Native Computing Foundation). Kubernetes can group any number of containers into one logical unit so that they are easy to manage and deploy. It runs on public clouds, on-premises infrastructure, and hybrid environments.

Benefits of Using Kubernetes

Kubernetes simplifies the orchestration of containerized applications, making it an essential tool in DevOps.

1. Automated deployment and management

- If you use Kubernetes to deploy your application, little manual intervention is needed: Kubernetes automates deploying, scaling, and managing the containerized application.

- By removing manual steps, Kubernetes reduces human error, which makes deployments more reliable.

2. Scalability

- You can scale application containers based on incoming traffic. With the Horizontal Pod Autoscaler, Kubernetes automatically adjusts the number of pods according to the observed load.
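The Kubernetes documentation describes the Horizontal Pod Autoscaler's core calculation as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python sketch below reproduces that formula, assuming the documented default tolerance of 10% and hypothetical minimum and maximum replica bounds. The real controller also handles missing metrics, pod readiness, and scale-down stabilization, which are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation: scale the replica count by the metric ratio."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close enough to 1.0 (within the tolerance).
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical defaults of 1 and 10 here).
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))  # prints 6
```

Scaling in works the same way: 4 pods at 30% CPU against a 60% target give a ratio of 0.5, so the sketch returns 2.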

3. High availability

- Kubernetes helps you achieve high availability by running multiple replicas of your application across nodes and replacing failed ones, and spreading traffic across those replicas can also help reduce latency for end users.

4. Cost-effectiveness

- Unused or over-provisioned infrastructure increases cost. Kubernetes places workloads on nodes according to their resource requests, which helps reduce wasted resources and control over-provisioning.

5. Improved developer productivity

- Developers can concentrate on writing code, because Kubernetes reduces the effort of deploying the application.

Deploying and Managing Containerized Applications With Kubernetes

Follow the steps below to deploy an application as containers.

Step 1

Install Kubernetes and set up a Kubernetes cluster. A typical setup has at least one master node and two worker nodes. Alternatively, you can create the cluster on any cloud provider that offers Kubernetes as a managed service, such as Amazon EKS, Google Kubernetes Engine (GKE), or Azure Kubernetes Service (AKS).

Step 2

Now create a Deployment manifest file. In the manifest, specify the number of pod replicas required, the container image to run, and the resources each container needs. After writing the manifest, apply it with the kubectl apply command.
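Deployment manifests are usually written in YAML, but kubectl also accepts JSON. To keep the examples in one language, the sketch below builds a minimal apps/v1 Deployment as a Python dictionary and prints it as JSON. The name `web`, the image `nginx:1.25`, and the resource values are hypothetical placeholders to adjust for your application.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int,
                        cpu: str = "250m", memory: str = "128Mi",
                        container_port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of pods to keep running
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,  # container image to run
                        "ports": [{"containerPort": container_port}],
                        "resources": {  # resources each container needs
                            "requests": {"cpu": cpu, "memory": memory},
                            "limits": {"cpu": cpu, "memory": memory},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.25", replicas=3), indent=2))
```

If the script is saved as, say, `make_deployment.py`, its output can be piped straight to the cluster with `python make_deployment.py | kubectl apply -f -`, because `kubectl apply -f -` reads the manifest from standard input.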

Step 3

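After the pods are created, expose them as a Service so they can be reached. This requires another manifest file that specifies the Service type, the ports, and a selector that matches the pods' labels. A ClusterIP Service is reachable only inside the cluster; to expose the application outside the cluster, use a type such as LoadBalancer or NodePort.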
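A Service manifest can be built the same way. The Python sketch below emits a minimal v1 Service as JSON, assuming pods labeled `app: web` (a hypothetical label). It also includes a small `selects` helper, which is our own illustration and not a Kubernetes API, to mimic equality-based label matching: a pod backs the Service only if its labels contain every key/value pair in the Service's selector.

```python
import json


def service_manifest(name: str, service_type: str, port: int, target_port: int,
                     selector: dict) -> dict:
    """Build a minimal v1 Service manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,        # e.g. "ClusterIP", "NodePort", "LoadBalancer"
            "selector": dict(selector),  # pods whose labels match receive the traffic
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def selects(selector: dict, pod_labels: dict) -> bool:
    """Equality-based matching: every selector key/value must appear in the pod's labels."""
    if not selector:
        # A Service with no selector gets no automatically managed endpoints.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    svc = service_manifest("web", "LoadBalancer", port=80, target_port=80,
                           selector={"app": "web"})
    print(json.dumps(svc, indent=2))
```

Extra labels on a pod do not prevent a match; only missing or different selector keys do.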

Use cases of Kubernetes in real-world scenarios

The following are some real-world use cases of Kubernetes:

- E-commerce: With autoscaling and load balancing, you can deploy and manage e-commerce websites that serve millions of users and transactions.

- Media and entertainment: You can store static and dynamic content and deliver it to users around the world with low latency.

- Financial services: Kubernetes suits critical financial applications because of the security features it offers, such as role-based access control (RBAC) and network policies.

- Healthcare: You can store patient data and run applications that help track patient health outcomes.

Comparing Kubernetes with Other Container Orchestration Platforms

| Feature | Kubernetes | Docker Swarm | OpenShift | Nomad |
|---|---|---|---|---|
| Deployment | Containers are deployed using the kubectl CLI, with all configurations defined in manifests. | Containers are deployed using a docker-compose file that contains all necessary configurations. | Containers are deployed using manifests or the OpenShift CLI. | Containers are deployed from a job file written in HCL (HashiCorp Configuration Language). |
| Scalability | Kubernetes manages heavy incoming traffic by scaling pods across multiple nodes. | Scaling is supported but less efficient than Kubernetes. | Uses Kubernetes scaling, since OpenShift is built on Kubernetes. | Scaling is supported but less efficient than Kubernetes. |
| Networking | Network plugins (CNI) can be swapped in to increase networking flexibility. | Simpler to use than Kubernetes networking. | Offers a more advanced networking model. | Supports integration with various networking plugins for flexibility. |
| Storage | Supports multiple storage options, such as Persistent Volume Claims (PVCs) and cloud-based storage. | Local storage can be used more flexibly. | Supports both local and cloud storage options. | Supports both local and cloud storage options. |

The Future of Kubernetes

Kubernetes has changed a great deal since its release, and it is continually upgraded to compete with other container orchestration platforms. As it continues to evolve, Kubernetes is poised to play an even more significant role in shaping the future of technology. The following are some of the key trends shaping the future of Kubernetes:

- AI-Powered Automation and AutoML
- Edge Computing and Hybrid Cloud Environments
- Data Fabric and Data Governance
- Multi-Cloud and Cloud-Native Applications
- Security and Compliance
- Sustainability and Resource Optimization

Features of Kubernetes

- Automated Scheduling – Kubernetes provides an advanced scheduler that launches containers on cluster nodes while optimizing resource use.

- Self-Healing Capabilities – It restarts, replaces, and reschedules containers that have died.

- Automated Rollouts and Rollbacks – It rolls out changes toward the desired state of the containerized application and can roll them back.

- Horizontal Scaling and Load Balancing – Kubernetes can scale the application up and down as required and balance traffic across its pods.

- Resource Utilization – Kubernetes provides resource utilization monitoring and optimization, ensuring containers use their resources efficiently.

- Support for multiple clouds and hybrid clouds – Kubernetes can be deployed on different cloud platforms and run containerized applications across multiple clouds.

- Extensibility – Kubernetes is highly extensible with custom plugins and controllers.

- Community Support – Kubernetes has a large, active community, with frequent updates, bug fixes, and new features.
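To make automated rollouts more concrete: a Deployment's rolling update is bounded by two settings, maxSurge and maxUnavailable, both of which default to 25%. As we understand the Deployment controller, percentages are scaled against the desired replica count, with maxSurge rounded up and maxUnavailable rounded down. If both resolve to zero, maxUnavailable is treated as 1 so the rollout can still progress. The Python sketch below (the function name is ours) computes the resulting limits on total pods and available pods during a rollout.

```python
import math


def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple[int, int]:
    """Return (max_total_pods, min_available_pods) during a rolling update."""

    def resolve(value, round_up: bool) -> int:
        # Values may be absolute integers or percentage strings such as "25%".
        if isinstance(value, str) and value.endswith("%"):
            scaled = replicas * int(value[:-1]) / 100
            return math.ceil(scaled) if round_up else math.floor(scaled)
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    if surge == 0 and unavailable == 0:
        # Rounding can make both zero; allow one unavailable pod so the rollout can proceed.
        unavailable = 1
    return replicas + surge, replicas - unavailable


# 10 replicas with the 25% defaults: at most 13 pods exist, at least 8 stay available.
print(rollout_bounds(10))  # prints (13, 8)
```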

Kubernetes Vs Docker Swarm

| Feature | Docker Swarm | Kubernetes |
|---|---|---|
| Developer | Developed by Docker Inc. | Developed by Google, now managed by CNCF |
| Auto-Scaling | No built-in auto-scaling | Supports auto-scaling |
| Load Balancing | Performs automatic load balancing | Load balances across pods through Services; external load balancers are configured per Service (e.g., type LoadBalancer) |
| Rolling Updates | Performs rolling updates to containers directly | Performs rolling updates to Pods as a whole |
| Storage Volumes | Shares storage volumes with any other containers | Shares storage volumes between containers in pods |
| Logging & Monitoring | Uses 3rd party tools like ELK | Provides basic built-in tools (e.g., kubectl logs) and integrates with monitoring add-ons |

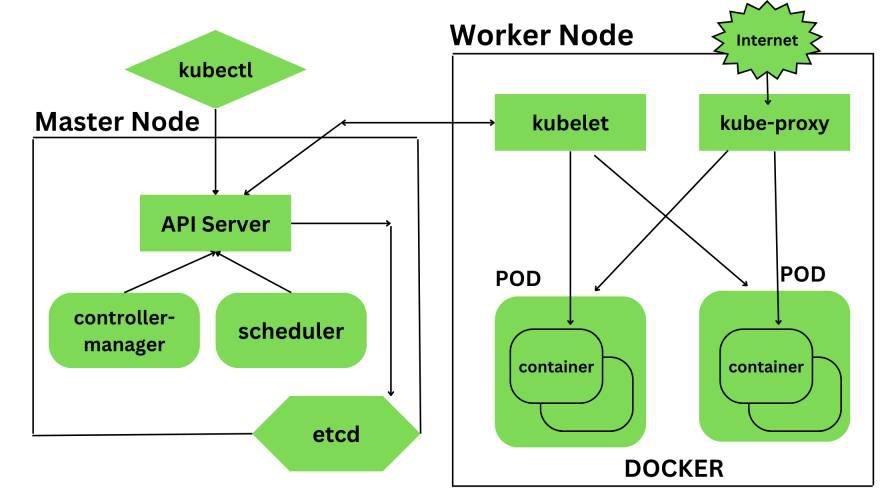

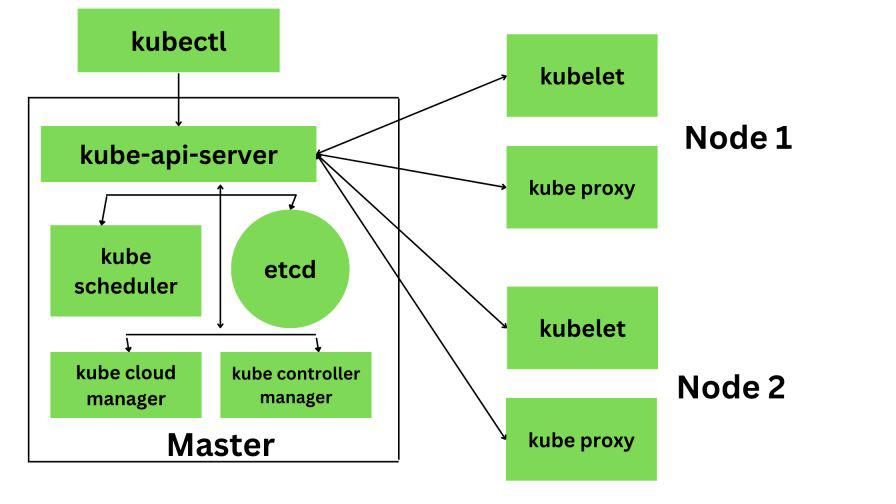

Architecture of Kubernetes

Kubernetes follows a client-server architecture: the master is installed on one machine and the nodes on separate Linux machines. It follows a master-worker model, in which a master manages containers across multiple Kubernetes nodes. A master and the worker nodes it controls constitute a “Kubernetes cluster”. A developer deploys an application in containers with the assistance of the Kubernetes master.

Key components of Kubernetes

1. Kubernetes- Master Node Components

The Kubernetes master is responsible for managing the entire cluster: it coordinates all activities inside the cluster and communicates with the worker nodes to keep Kubernetes and your application running. It is the entry point for all administrative tasks. When we install Kubernetes on our system, four primary master components get installed. The components of the Kubernetes master node are:

API Server

The API server is the entry point for all the REST commands used to control the cluster. All administrative tasks go through the API server in the master node: if we want to create, delete, update, or display a Kubernetes object, the request has to pass through it. The API server validates and configures API objects such as pods, services, replication controllers, and deployments, and it exposes an API for every operation. We can interact with these APIs using a tool called kubectl. kubectl is a small binary written in Go that talks to the API server to perform the operations we issue from the command line. It is a command-line interface for running commands against Kubernetes clusters.
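Each kubectl command ultimately becomes an HTTP request against a REST path on the API server. The Python sketch below builds those paths for core resources (served under /api/v1) and for named API groups such as apps (served under /apis/<group>/<version>), and pairs each basic operation with its HTTP method. The helper names are hypothetical; you can inspect real paths with `kubectl get --raw`, for example `kubectl get --raw /api/v1/namespaces/default/pods`.

```python
from typing import Optional

# HTTP method used for each basic API operation.
VERBS = {"create": "POST", "get": "GET", "list": "GET",
         "update": "PUT", "patch": "PATCH", "delete": "DELETE"}


def resource_path(resource: str, group: str = "", version: str = "v1",
                  namespace: Optional[str] = None, name: Optional[str] = None) -> str:
    """Build the REST path for a Kubernetes resource (collection or single object)."""
    # The core group ("") lives under /api; every other group lives under /apis.
    base = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    parts = [base]
    if namespace is not None:  # namespaced resources such as pods
        parts.append(f"namespaces/{namespace}")
    parts.append(resource)
    if name is not None:  # a single object rather than the whole collection
        parts.append(name)
    return "/".join(parts)


def request_line(operation: str, **kwargs) -> str:
    """Combine the HTTP method and the path for an operation."""
    return f"{VERBS[operation]} {resource_path(**kwargs)}"


print(request_line("list", resource="pods", namespace="default"))
# prints GET /api/v1/namespaces/default/pods
print(request_line("delete", resource="deployments", group="apps",
                   namespace="default", name="web"))
# prints DELETE /apis/apps/v1/namespaces/default/deployments/web
```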

Scheduler

It is a service in the master that is responsible for distributing the workload. It tracks how much of each worker node's resources is in use and places new workloads on nodes that have resources available to accept them. The scheduler assigns pods to available nodes according to the constraints you specify in the configuration file.
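kube-scheduler works in two phases: it filters out nodes that cannot run the pod, then scores the remaining nodes and binds the pod to the best one. The toy Python sketch below mimics that idea for CPU and memory only, using a least-allocated score that prefers the node with the most capacity left free after placement. The `Node` class and the numbers are hypothetical. The real scheduler runs many more plugins (affinity, taints, topology spread) and breaks ties randomly, whereas this sketch breaks them by name.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_allocatable: int    # millicores the node can offer to pods
    mem_allocatable: int    # MiB the node can offer to pods
    cpu_requested: int = 0  # millicores already requested by scheduled pods
    mem_requested: int = 0  # MiB already requested by scheduled pods


def fits(node: Node, cpu: int, mem: int) -> bool:
    """Filter phase: the node must have room for the pod's requests."""
    return (node.cpu_requested + cpu <= node.cpu_allocatable
            and node.mem_requested + mem <= node.mem_allocatable)


def least_allocated_score(node: Node, cpu: int, mem: int) -> float:
    """Score phase: average fraction of CPU and memory left free after placement."""
    cpu_free = (node.cpu_allocatable - node.cpu_requested - cpu) / node.cpu_allocatable
    mem_free = (node.mem_allocatable - node.mem_requested - mem) / node.mem_allocatable
    return (cpu_free + mem_free) / 2


def schedule(nodes: list[Node], cpu: int, mem: int) -> Optional[str]:
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None  # no node fits: the pod would stay Pending
    # Highest score wins; ties go to the alphabetically first name.
    best = min(feasible, key=lambda n: (-least_allocated_score(n, cpu, mem), n.name))
    best.cpu_requested += cpu  # "bind" the pod by reserving its requests
    best.mem_requested += mem
    return best.name


nodes = [Node("node-a", 2000, 4096, cpu_requested=1500, mem_requested=1024),
         Node("node-b", 2000, 4096, cpu_requested=500, mem_requested=1024),
         Node("node-c", 1000, 1024)]
print(schedule(nodes, cpu=500, mem=512))  # prints node-b
```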

Controller Manager

The controller manager runs the cluster's controllers. It is a daemon that runs in a non-terminating loop and is responsible for collecting information and sending it to the API server. It regulates the Kubernetes cluster by performing lifecycle functions such as namespace creation and lifecycle event garbage collection, terminated pod garbage collection, cascading-deletion garbage collection, node garbage collection, and many more. Basically, a controller watches the desired state of the cluster; if the current state does not match the desired state, the control loop takes corrective steps until the two match. The key controllers are the replication controller, endpoint controller, namespace controller, and service account controller. In this way, controllers are responsible for the overall health of the cluster, ensuring that nodes are up and running and that the correct pods are running as described in the spec files.
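The control loop described above can be illustrated with a toy replica controller in Python. Each call to `reconcile` compares the desired replica count with the pods that currently exist and returns the corrective actions it took. The class and the pod naming scheme are hypothetical. The real ReplicaSet controller works through the API server and uses extra criteria, such as readiness and age, to choose which pods to delete.

```python
import itertools


class ToyReplicaController:
    """Drives the observed set of pods toward a desired replica count."""

    def __init__(self, name: str, desired: int):
        self.name = name
        self.desired = desired
        self.pods: set[str] = set()     # observed (current) state
        self._ids = itertools.count(1)  # source of unique pod suffixes

    def reconcile(self) -> list[tuple[str, str]]:
        """Run one pass of the control loop and return the actions taken."""
        actions = []
        diff = self.desired - len(self.pods)
        for _ in range(diff):   # too few pods: create the missing ones
            pod = f"{self.name}-{next(self._ids)}"
            self.pods.add(pod)
            actions.append(("create", pod))
        for _ in range(-diff):  # too many pods: delete the surplus
            pod = sorted(self.pods)[-1]
            self.pods.remove(pod)
            actions.append(("delete", pod))
        return actions


ctrl = ToyReplicaController("web", desired=3)
print(ctrl.reconcile())     # creates web-1, web-2, web-3
ctrl.pods.discard("web-2")  # simulate a pod dying
print(ctrl.reconcile())     # replaces it with web-4
```

Once the observed state matches the desired state, `reconcile` returns an empty list, which is what "converged" means for a control loop.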

etcd

It is a lightweight, distributed key-value database. In Kubernetes, it is the central database that stores the current cluster state at any point in time, along with configuration details such as subnets and ConfigMaps. It is written in the Go programming language.
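To make the idea of a key-value store for cluster state concrete, the toy Python store below keeps objects under keys shaped like /registry/pods/<namespace>/<name>, which resembles the layout the API server uses in etcd by default. It bumps a global revision on every write, much as etcd does; Kubernetes surfaces that revision as an object's resourceVersion. This is an in-memory illustration with hypothetical helpers, not an etcd client. Real etcd replicates data across members with the Raft consensus algorithm, and Kubernetes does not necessarily store objects as JSON.

```python
import json
from typing import Optional


class ToyKV:
    """In-memory stand-in for etcd: keys, values, and a global revision counter."""

    def __init__(self):
        self._data: dict[str, tuple[str, int]] = {}  # key -> (value, revision of last write)
        self.revision = 0

    def put(self, key: str, value: str) -> int:
        self.revision += 1  # every write advances the store's revision
        self._data[key] = (value, self.revision)
        return self.revision

    def get(self, key: str) -> Optional[tuple[str, int]]:
        return self._data.get(key)

    def delete(self, key: str) -> bool:
        if key not in self._data:
            return False
        self.revision += 1  # deletions are writes too
        del self._data[key]
        return True

    def range_prefix(self, prefix: str) -> list[tuple[str, str]]:
        """List all keys under a prefix, similar to an etcd range query."""
        return [(k, v) for k, (v, _) in sorted(self._data.items()) if k.startswith(prefix)]


def pod_key(namespace: str, name: str) -> str:
    return f"/registry/pods/{namespace}/{name}"


store = ToyKV()
store.put(pod_key("default", "web-1"), json.dumps({"phase": "Running"}))
store.put(pod_key("kube-system", "dns-1"), json.dumps({"phase": "Running"}))
print(store.range_prefix("/registry/pods/default/"))  # only the default-namespace pod
```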

2. Kubernetes- Worker Node Components

A Kubernetes worker node contains all the services needed to manage networking between containers, communicate with the master node, and assign resources to the containers scheduled on it. The components of a Kubernetes worker node are:

Kubelet

The kubelet is the primary node agent. It runs on each worker node in the cluster and communicates with the master node. It receives pod specifications through the API server, starts the containers associated with those pods, and ensures that the containers described in the pods are running and healthy. If the kubelet notices that a container in a pod has failed, it restarts that container on the same node according to the pod's restart policy. If the problem is with the worker node itself, the Kubernetes master detects the node failure, and pods managed by a controller (such as a Deployment) are recreated on another healthy node.
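Whether the kubelet restarts a container depends on the pod's restartPolicy (Always, OnFailure, or Never), and repeated crashes are throttled with an exponential back-off. The Kubernetes documentation describes that delay as starting at 10 seconds, doubling after each restart, and capping at five minutes, with the timer reset once a container runs for 10 minutes without problems. The Python sketch below models the policy check and the delay sequence; the function names are our own, and the reset rule is not modelled.

```python
def should_restart(restart_policy: str, exit_code: int) -> bool:
    """Decide whether the kubelet restarts a container that has exited."""
    if restart_policy == "Always":
        return True
    if restart_policy == "OnFailure":
        return exit_code != 0
    if restart_policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {restart_policy}")


def backoff_delays(restarts: int, initial: int = 10, cap: int = 300) -> list[int]:
    """Seconds to wait before each successive restart: doubling, capped at 5 minutes."""
    return [min(initial * 2 ** i, cap) for i in range(restarts)]


print(should_restart("OnFailure", exit_code=1))  # prints True: the container failed
print(backoff_delays(7))  # prints [10, 20, 40, 80, 160, 300, 300]
```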

Kube-Proxy

It is the core networking component on each node of the Kubernetes cluster and maintains the network rules that make Services work. Kube-Proxy maintains the distributed network across all the nodes, pods, and containers and helps expose services to the outside world. It acts as a network proxy and load balancer for services on a single worker node and routes TCP and UDP traffic. It watches the API server for the creation and deletion of services and their endpoints, and for each one it sets up the routing rules that make it reachable.
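In its iptables mode, kube-proxy spreads connections across a Service's endpoints with a chain of random-match rules. With n endpoints, the first rule matches with probability 1/n, the next with 1/(n-1), and so on, until the last rule matches unconditionally. The Python sketch below (the helper names and endpoint addresses are ours) checks that this chain gives every endpoint an equal share and simulates a single pick. Other modes, such as IPVS, use different balancing algorithms.

```python
import random
from fractions import Fraction


def rule_probabilities(n: int) -> list[Fraction]:
    """Match probability of rule i in a chain of n endpoint rules: 1/(n - i)."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(n: int) -> list[Fraction]:
    """Overall fraction of new connections that reach each endpoint."""
    shares, not_yet_matched = [], Fraction(1)
    for p in rule_probabilities(n):
        shares.append(not_yet_matched * p)  # reach this rule, then match it
        not_yet_matched *= 1 - p
    return shares


def pick_endpoint(endpoints: list[str], rng: random.Random) -> str:
    """Walk the rules in order, as a new connection traverses the chain."""
    n = len(endpoints)
    for i, endpoint in enumerate(endpoints):
        if rng.random() < 1 / (n - i):  # last rule has probability 1 and always matches
            return endpoint
    raise ValueError("no endpoints")


print(effective_shares(3))  # each endpoint gets Fraction(1, 3)
print(pick_endpoint(["10.0.0.1:80", "10.0.0.2:80", "10.0.0.3:80"], random.Random(42)))
```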

Pods

A pod is a group of one or more containers that are deployed together on the same host. Pods let us deploy multiple dependent containers together: a pod acts as a wrapper around these containers, so we interact with and manage them primarily through the pod.
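As an illustration of dependent containers sharing a pod, the Python sketch below builds a v1 Pod manifest with two containers: a helper that rewrites a file every 5 seconds and a web server that serves it. Both mount the same emptyDir volume, which lives as long as the pod does; containers in a pod also share its network namespace, so they could talk over localhost. The images (`nginx:1.25`, `busybox:1.36`) and all names are hypothetical placeholders, and the printed JSON can be passed to `kubectl apply -f -`.

```python
import json


def shared_volume_pod(name: str = "web-with-helper") -> dict:
    """Build a v1 Pod whose two containers share an emptyDir volume."""
    volume = "shared-html"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Scratch space that exists for the lifetime of the pod.
            "volumes": [{"name": volume, "emptyDir": {}}],
            "containers": [
                {   # Web server: serves whatever the helper writes.
                    "name": "web",
                    "image": "nginx:1.25",
                    "volumeMounts": [{"name": volume, "mountPath": "/usr/share/nginx/html"}],
                },
                {   # Helper: rewrites index.html with the current date every 5 seconds.
                    "name": "helper",
                    "image": "busybox:1.36",
                    "command": ["sh", "-c", "while true; do date > /data/index.html; sleep 5; done"],
                    "volumeMounts": [{"name": volume, "mountPath": "/data"}],
                },
            ],
        },
    }


print(json.dumps(shared_volume_pod(), indent=2))
```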

Docker

Docker is a containerization platform that packages your application and all its dependencies together in the form of containers, so that your application works seamlessly in any environment, whether development, test, or production. Docker is designed to make it easier to create, deploy, and run applications by using containers. It is one of the most widely used container platforms. It was launched in 2013 by a company called dotCloud and is written in the Go language. Within a few years of its launch, many teams had already moved workloads from VMs to containers. Docker is designed to benefit both developers and system administrators, which makes it part of many DevOps toolchains. Developers can write code without worrying about the testing and production environments, and sysadmins need not worry about infrastructure, because Docker makes it easy to scale the number of systems up and down. Docker comes into play at the deployment stage of the software development cycle. Note that current Kubernetes versions run containers through a runtime that implements the Container Runtime Interface, such as containerd or CRI-O, and images built with Docker run on these runtimes unchanged.

Applications of Kubernetes

- Microservices architecture: Kubernetes is well-suited for managing microservices architectures, which involve breaking down complex applications into smaller, modular components that can be independently deployed and managed.

- Cloud-native development: Kubernetes is a key component of cloud-native development, which involves building applications that are designed to run on cloud infrastructure and take advantage of the scalability, flexibility, and resilience of the cloud.

- Continuous integration and delivery: Kubernetes integrates well with CI/CD pipelines, making it easier to automate the deployment process and roll out new versions of your application with minimal downtime.

- Hybrid and multi-cloud deployments: Kubernetes provides a consistent deployment and management experience across different cloud providers, on-premise data centers, and even developer laptops, making it easier to build and manage hybrid and multi-cloud deployments.

- High-performance computing: Kubernetes can be used to manage high-performance computing workloads, such as scientific simulations, machine learning, and big data processing.

- Edge computing: Kubernetes is also being used in edge computing applications, where it can be used to manage containerized applications running on edge devices such as IoT devices or network appliances.