Kubernetes – Replication Controller

Kubernetes is an open-source container orchestration system that automates much of software deployment, scaling, and management. Kubernetes is also known as K8s. It was created by Google and is now maintained by the Cloud Native Computing Foundation. It was initially built to work with the Docker runtime and now also supports other runtimes such as CRI-O. The primary goal of Kubernetes is to automate the operational work of managing containers. It has built-in capabilities for deploying applications and rolling out changes to them. Businesses like Google, Spotify, and Capital One currently use it.

How a ReplicationController Works

The main purpose of a ReplicationController is to ensure that a specified number of Pod replicas is running at any given time. This keeps a Pod, or a set of Pods, available to do its work. It is often abbreviated as “RC”.

Working of ReplicationController

If more Pods are running than the desired count, the ReplicationController terminates the extra Pods. If too few are running, it creates more Pods. Unlike Pods that you create manually, Pods created by a ReplicationController are replaced automatically if they fail or are deleted. A ReplicationController is similar to a process supervisor, but instead of supervising individual processes on a single node, it supervises multiple Pods across multiple nodes.

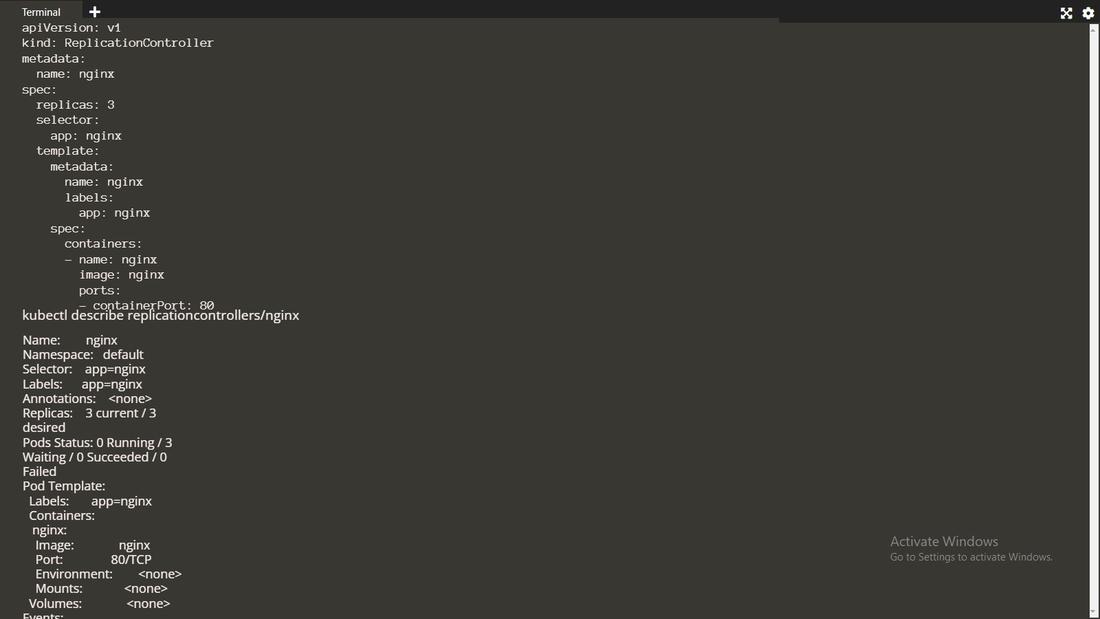

Running an Example ReplicationController

The following YAML file runs Tomcat as a container in a Kubernetes cluster. Create a file named tomcat.yaml and paste the following content into it.

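```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: tomcat
spec:
  replicas: 3            # number of Pod copies to keep running
  selector:
    app: tomcat          # manage Pods that carry this label
  template:              # Pod template used to create new replicas
    metadata:
      name: tomcat
      labels:
        app: tomcat      # must match the selector above
    spec:
      containers:
      - name: tomcat
        image: tomcat:latest
        ports:
        - containerPort: 8080
```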

After creating the YAML file, apply it with the following command:

```shell
kubectl apply -f tomcat.yaml
```

If the command succeeds, it prints the following output:

```
replicationcontroller/tomcat created
```

This means the ReplicationController was created successfully. You can check its status with the following command:

```shell
kubectl describe replicationcontrollers/tomcat
```

Writing a ReplicationController Manifest

A ReplicationController manifest needs the following fields:

- apiVersion
- kind
- metadata
- spec
  - selector
  - template
    - labels (in the template's metadata)
    - spec (the Pod spec)

The following is a sample template for a ReplicationController manifest:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: <replicationControllerName>
  namespace: <nameSpaceName>
spec:
  replicas: <noOfReplicas>
  selector:
    <key>: <value>          # must match the labels in the Pod template
  template: # POD Template
    metadata:
      name: <PODName>
      labels:
        <key>: <value>
    spec:
      containers:
      - name: <nameOfTheContainer>
        image: <imageName>
        ports:
        - containerPort: <containerPort>
```

Labels on the ReplicationController

Labels are key-value pairs that can be attached to Kubernetes resources and used to identify and group them. Labels play a major role in the ReplicationController, because they are how it identifies and organizes the Pods it manages. Labels on nodes, combined with a nodeSelector in the Pod template, can also control which nodes the Pods are scheduled on.
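As a minimal sketch of both uses of labels, the manifest below is the tomcat ReplicationController with one extra Pod label and a nodeSelector. The tier: backend Pod label and the disktype: ssd node label are hypothetical; the nodeSelector only has an effect if some nodes in your cluster actually carry that label, otherwise the Pods stay Pending.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: tomcat
spec:
  replicas: 3
  selector:
    app: tomcat            # the RC identifies its Pods by this label
  template:
    metadata:
      labels:
        app: tomcat        # matches the selector above
        tier: backend      # hypothetical extra label for organizing Pods
    spec:
      # Hypothetical node label: schedule only onto nodes labeled disktype=ssd.
      nodeSelector:
        disktype: ssd
      containers:
      - name: tomcat
        image: tomcat:latest
        ports:
        - containerPort: 8080
```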

Pod Selector

A Pod selector selects Pods based on their labels. It works on key/value pairs, and selectors are used in various Kubernetes resources, such as:

- ReplicationControllers
- Deployments
- DaemonSets
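To illustrate the difference in syntax, the two fragments below show only the selector part of a spec (they are partial snippets, not complete manifests) and reuse the app: tomcat label as an example. A ReplicationController takes a plain map of labels, while Deployments and DaemonSets in the apps/v1 API nest the same labels under matchLabels.

```yaml
# ReplicationController: the selector is a plain map of labels.
# A Pod matches only if it has every listed key with exactly this value.
spec:
  selector:
    app: tomcat
---
# Deployment or DaemonSet (apps/v1): the same labels go under matchLabels.
spec:
  selector:
    matchLabels:
      app: tomcat
```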

Using ReplicationControllers with Services

A ReplicationController lets you easily create multiple Pods and then makes sure that this number of Pods always exists. If a Pod crashes, the ReplicationController replaces it. Because a Service also selects Pods by label, it can route traffic to Pods managed by one or more ReplicationControllers, for example sending some traffic to an old version and some to a new one. ReplicationControllers and Pods are associated through labels. Creating an RC with a replica count of 1 ensures that one Pod is always available.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-rc
spec:
  replicas: 2
  selector:
    app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
```
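A Service finds the Pods of nginx-rc through the same app: nginx label. As a minimal sketch, assuming a default ClusterIP Service with the hypothetical name nginx-service, the manifest could look like this:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service   # hypothetical name
spec:
  # Send traffic to every Pod labeled app=nginx,
  # which includes the Pods created by nginx-rc.
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80            # port the Service listens on
    targetPort: 80      # containerPort of the nginx Pods
```

When the ReplicationController replaces a crashed Pod, the new Pod carries the same label, so the Service starts routing to it without any change to the Service.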

Responsibilities of the ReplicationController

The ReplicationController makes sure that the number of running Pods it manages matches the desired count:

- It compares the desired number of Pods with the number actually running. If too few are running, it creates new Pods until the two counts match.
- If more Pods are running than desired, it deletes the extra Pods.
- It watches the Pods it manages. If a Pod fails or is deleted, for example because its node goes down, it creates a replacement Pod.
- As a result, the desired number of Pods keeps running even when individual Pods fail or are deleted.

Kubernetes Replication Controller vs Replica Set

| Feature | Replication Controller | Replica Set |
|---|---|---|
| Purpose | Ensures the desired number of Pods is running | Next-generation replacement for the Replication Controller |
| Functionality | Creates multiple Pods and ensures that the specified number of Pods is always running | Same functionality: ensures the desired number of Pods is running |
| Association | Pods are associated through a label selector (a plain selector map) | Pods are associated through a label selector (matchLabels and/or matchExpressions) |
| Selector Support | Supports only equality-based selectors (e.g., app=frontend) | Supports both equality-based and set-based selectors (e.g., app in (frontend, backend)) |
| Use Case | Older version, still functional but less flexible | Newer and more flexible, with advanced selector capabilities |
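As a sketch of the set-based selector from the table, the ReplicaSet below matches Pods whose app label is either frontend or backend. The name frontend and the labels are hypothetical. Note that ReplicaSet belongs to the apps/v1 API group rather than v1, and its template labels must satisfy the selector.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend          # hypothetical name
spec:
  replicas: 3
  selector:
    # Set-based selector: match Pods whose "app" label
    # is either frontend or backend.
    matchExpressions:
    - key: app
      operator: In
      values:
      - frontend
      - backend
  template:
    metadata:
      labels:
        app: frontend     # satisfies the selector above
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
```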

Kubernetes Replication Controller vs Deployment

| Feature | Replication Controller | Deployment |
|---|---|---|
| Purpose | Ensures the desired number of Pods is always running | Recommended way to deploy Pods; manages ReplicaSets (RS), which in turn manage Pods, and adds advanced features |
| Pod Management | Automatically replaces crashed Pods | Manages Pods and RS with advanced capabilities such as rollouts and rollbacks |
| Rolling Updates | No built-in rolling update; it must be done manually, for example by scaling a new RC up and the old one down | Supports declarative rolling updates and rollbacks |
| Scaling | Can be scaled via commands or by changing replicas in the manifest | Can be scaled via commands or by changing replicas in the manifest |
| Scaling Command | Use: kubectl scale rc [RC_NAME] --replicas=[NUMBER_OF_REPLICAS] | Use: kubectl scale deployment [DEPLOYMENT_NAME] --replicas=[NUMBER_OF_REPLICAS] |
| Traffic-based Scaling | Can be autoscaled by a HorizontalPodAutoscaler based on observed metrics such as CPU usage | Can be autoscaled by a HorizontalPodAutoscaler based on observed metrics such as CPU usage |
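For comparison, below is a minimal sketch of the nginx-rc example rewritten as a Deployment. The name nginx-deployment and the maxUnavailable and maxSurge values are assumptions chosen for illustration, not required settings.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment   # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1    # at most one Pod unavailable during an update
      maxSurge: 1          # at most one extra Pod above the replica count
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
```

After applying it, changing the image (for example with kubectl set image deployment/nginx-deployment nginx=<new-image>) starts a rolling update, and kubectl rollout undo deployment/nginx-deployment returns to the previous revision.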

Working with ReplicationControllers

Deleting a ReplicationController

We can delete a ReplicationController and all of its Pods with the kubectl delete command, for example for the tomcat ReplicationController created earlier. To learn more about kubectl commands, refer to Kubernetes – Kubectl Commands.

```shell
kubectl delete rc tomcat
```

With this command, kubectl scales the ReplicationController to zero, waits until its Pods are deleted, and then deletes the ReplicationController itself. If this process is interrupted before it completes, it can be restarted.

It is also possible to delete only the ReplicationController and keep its Pods running. To do this, pass the --cascade=orphan option to kubectl delete:

```shell
kubectl delete rc tomcat --cascade=orphan
```

The orphaned Pods keep running, and a new ReplicationController with the same selector will adopt them.